Director, Operational Test & Evaluation

FY97 Annual Report

DIRECTOR'S INTRODUCTION

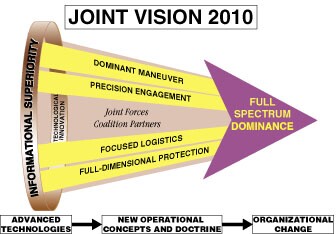

Joint Vision 2010 is the conceptual framework for how U.S. forces will fight in the future. The reports on individual systems in this volume identify the contribution each system makes to Joint Vision 2010. Each system is developing measures of effectiveness and suitability that directly relate to the four new operational concepts in Joint Vision 2010: dominant maneuver, precision engagement, full-dimensional protection, and focused logistics. In this report we begin the process of putting each evaluation in the context of Joint Vision 2010.

The QDR defined a plan to rebalance our defense programs to pay for the transformation of our forces required by the shape-respond-prepare strategy in the QDR. Its reduction in military end-strength and force structure will be offset, in the plan, by enhanced capabilities of new systems and streamlined support structures. Testing, of course, plays a key role in defining what combat capabilities a system actually has. This volume continues to report on combat effectiveness and suitability, survivability and lethality. As part of the shift in resources as a result of the QDR, the Secretary directed additional funds to National Missile Defense, Battlefield Digitization, and Chemical/Biological Defense. My office also has budgeted additional resources to support these programs because of their importance, tight schedules, and technical risk.

The Defense Reform Initiative redefines how the Defense Department conducts business through its organization, infrastructure, and business practices. The initiative includes adopting best business practices, streamlining the organization, making full use of commercial activities, and eliminating unneeded infrastructure. Electronic business operations is the first practice encouraged with a goal of making all aspects of contracting for major weapons systems paper free by the year 2000. In support of this practice, the 1997 DOT&E Annual Report will be the third edition available in unclassified version on the internet (www.dote.osd.mil), and we will begin a process to make the test and evaluation master planning process paper free. In addition, the initiative hopes to consolidate, restructure, and regionalize many support activities to achieve economies of scale which may have a significant effect on the testing ranges. The Congress has asked for an independent evaluation of test resource adequacy. This annual report notes later in this introduction, and also in the Test Resources section, some of the general and specific deficiencies.

Lastly, the report of the National Defense Panel, Transforming Defense: National Security in the 21st Century, commented directly on the challenge to OT&E: "Transformation will take dedication and commitment--and a willingness to put talented people, money, resources, and structure behind a process designed to foster change. Greater emphasis should be placed on experimenting with a variety of military systems, operational concepts, and force structures." I discuss our contribution to this vision in the section on congressionally supported operational field assessments.

THE REVOLUTION IN MILITARY AFFAIRS

For Test and Evaluation this will require the end-to-end testing of battlefield operational concepts that link together the series of functions that must be accomplished in order to carry out critical operational tasks. As Secretary Cohen has said, "It is essential that new operational concepts be tested by a full range of joint and service warfighting experiments to develop a new joint doctrine."

Improved intelligence collection and assessment, as well as modern information processing and command and control capabilities, are at the heart of the Revolution in Military Affairs (RMA). During the last year, the Unmanned Air Vehicle and Joint STARS surveillance, target attack, and reconnaissance platforms have worked to improve their test planning and test execution. I call your attention to the detailed reports in this volume. In addition, the results of Army field experiments with battlefield digitization are reviewed in the section on Army Advanced Warfighting Experiments under Digitization of the Battlefield.

Over the last few years, there has been increased awareness that individual "black boxes" by themselves are not the answer. The Department now employs a system-of-systems approach that links surveillance and reconnaissance, intelligence assessment, command and control, mission preparation, and mission execution in an "End-to-End Battlefield Operational Concept." The stated goal of this end-to-end approach is to leverage new technologies to accomplish critical tasks that must be carried out to implement the National Defense Strategy. Traditionally, in Test and Evaluation we have emphasized the need to test and evaluate in precisely this end-to-end fashion. In this regard, I particularly call your attention to the review of the Army Tactical Missile System (ATACMS) Block IA.

Promising new battlefield operational concepts are often tested and refined during the conduct of advanced concept technology demonstrations (ACTDs). As reported last year, the Secretary has asked DOT&E and the Service Operational Test Agencies (OTAs) to actively assist in ACTD programs. When the results of these demonstrations indicate improved warfighting capability can be achieved, the concepts can be expeditiously integrated into the acquisition process. This volume contains reports on the following ACTDs: Predator, High Altitude Endurance, and EFOG-M.

The Services have embarked on an ambitious concept development and testing process that involves warfighting centers, battle labs, and warfighting experiments. New concepts considered operationally feasible are demonstrated early in field experiments. As new operational concepts and technologies are proven, they will lead to changes in the organization, employment of forces, and doctrine. Operational testing also should change to examine these options.

FY97 ACTIVITY -- focused on four Joint Vision 2010 Operational Concepts

In evaluating new systems, we assess how well each system accomplishes one or more of the four operational concepts that comprise Joint Vision 2010.

Dominant Maneuver requires U.S. forces to be:

- Lighter and more versatile, with logistics based at sea, and centralized combat service support functions at higher tactical levels.

- More mobile and lethal through greater reliance on netted firepower.

- More flexible in available strategic and tactical sea and air lift.

Precision Engagement is intended to shape the battlefield through near real-time information on the objective or target, a common awareness of the battlespace, greater assurance of generating the desired effect due to more accurate delivery with increased survivability, and rapid assessment of the results of the engagement and accurate reengagement, if required.

One of the precision engagement systems that received attention this year was the B-2. I briefed both the House and Senate Authorization Procurement Subcommittee conferees and staff members of the National Security Subcommittee on the B-2. I emphasized the costs and complexities of low observable maintenance and the need for further testing of certain B-2 subsystems. Our office is in a unique position to view cross-Service capabilities. In this case, during the last year we also sponsored a demonstration for the Air Force of Navy Low Observable diagnostic equipment. Based on this, the Navy is now working with the Air Force to provide the B-2 with an interim diagnostic tool.

Another program important to precision engagement is ATACMS-IA, which was found to be not operationally effective and not operationally suitable. A plan to correct deficiencies and retest has been formulated. Consistent with the Army Acquisition Executive Decision Memorandum for ATACMS-IA, the evaluation will be done in an end-to-end battlefield operational context that includes detection, location, targeting, lethality, survivability, and reliability. Such end-to-end tests are essential to understand the real military capability the system offers, as opposed to its technical performance in isolation. This has been stressed by the Department's emphasis on "End-to-End Battlefield Operational Concepts" as mentioned earlier. However, such testing, while particularly informative, is also resource intensive. Under normal conditions the only time all the combat systems are together is during training exercises. As a result, I have encouraged cooperation between testing and unit training or exercises at all echelons.

Full-Dimensional Protection, among other goals, seeks to develop and deploy a multi-tiered theater air and missile defense architecture. The sections on THAAD and NMD detail our efforts to strengthen their test programs especially to improve early ground-based testing. Additionally, the need to improve protection against chemical and biological weapons has led me to increase our efforts in this area. The section on the NBC/RS outlines some of the difficulties that are encountered in testing these types of systems. Finally, in conjunction with the National Security Agency, we have begun to plan a strategy for testing systems to defend against asymmetric attacks on information systems, infrastructure, and other critical areas.

Focused Logistics is intended to reduce the size of logistics support while helping to provide more agile, leaner combat forces. Initiatives hope to eliminate redundant requisitions and reduce delays in the shipment of essential supplies. The DRI goal is "Replacing the traditional military 'just-in-case' mindset for logistics with the modern business 'just-in-time' mindset." At least one evaluation during this last year (Combat Service Support Computer System) has indicated both the difficulty of doing this and the misguided tendency to use technology to automate an old way of doing business without changing the accompanying doctrine.

Each assessment in this volume puts the system under discussion in the context of the Operational Concepts most relevant to it.

EXPERIMENTATION

As the Secretary's Annual Report to the President and the Congress says this year, "Today, the world is in the midst of an RMA sparked by leap-ahead advances in information technologies. There is no definitive, linear process by which the Department can take advantage of the information revolution and its attendant RMA. Rather, it requires extensive experimentation both to understand the potential contribution of emerging technologies and to develop innovative operational concepts to harness these new technologies." Later in his report, the Secretary makes the point we already alluded to, that ". . . it is essential that (new battlefield operational concepts) be tested by a full range of joint and Service warfighting experiments . . . ."

During this year, the Army conducted an experiment that should be a model for all the Military Services: the Advanced Warfighting Experiment for Task Force XXI.

Very early in the process, the Army brought together the materiel developers, the users, the operational testers, and the requirements people. The overall process began at Fort Hood and Fort Rucker and culminated at the National Training Center. I believe the process started there could, with proper follow-through, save years, perhaps decades, in the development of the systems involved.

Later in the year, a Division-level experiment was also conducted. My office continues to monitor and participate in these activities.

All the Military Services are moving toward increased experimentation through battle labs, joint and multi-Service exercises, operational field assessments, and ACTDs. The Senate Armed Services Committee, the Defense Science Board, the National Defense Panel, and the Service Chiefs have all urged greater emphasis on experimentation. I strongly encourage this direction myself. The active participation of the OT&E community will be essential to realize the value from increased experimentation.

EXTERNAL REPORTS ON DOT&E ACTIVITY

DOT&E has vital, legislated responsibilities and, as a result, we regularly support external reviews of our performance. Last year there were two external audits of DOT&E: a GAO report on the "Impact of DOD's Office of the Director of Operational Test and Evaluation" and a DoD IG report entitled "Live-Fire Test and Evaluation of Major Defense Systems." In addition, the National Academy of Sciences has just completed a 3-1/2-year panel study that assesses statistical issues as well as broader issues on how to best use operational test and evaluation.

The last study of our office by the GAO was nine years ago in 1988. That study was quite critical of the performance of the office, and found fault with both the adequacy of test programs planned by the office and with inaccurate and incomplete reporting to Congress. By contrast, the current 1997 study finds that DOT&E is having an undeniable and positive influence on the quality of test and evaluation across the Department.

The current study notes that DOT&E's workload is increasing, while its resources have declined 15 percent since 1990. The GAO notes that the number of systems requiring oversight is increasing, as are the demands from acquisition reform. For example, the features of acquisition reform, including participation in Integrated Process Teams, are adding to our workload. Nevertheless, I believe we must continue to emphasize early involvement, in support of the Secretary's guidance, and to identify and correct deficiencies as early as possible.

The GAO reports examples of systems where our work is helping the Services and programs to understand the strengths of new military systems, as well as the weaknesses. For example, the GAO notes that our work on the Longbow Apache helped to demonstrate a powerful new firing technique that had not previously been appreciated, and to demonstrate that the Longbow Apache had three times greater lethality than the Hellfire Apache (AH-64A), eight times greater survivability, and had zero instances of fratricide compared to 34 for the AH-64A.

The report found "that DOT&E oversight of operational test and evaluation increases the probability that testing would be more realistic and thorough" and that "the independence of DOT&E--and its resulting authority to report directly to Congress--is the foundation of its effectiveness." The GAO concluded that "DOT&E actions are resulting in more assurance that new systems fielded to our armed forces are safe, suitable, and effective."

The DoD IG came to a similarly positive overall conclusion in its report on Live Fire Test and Evaluation. It concluded "the Office of the Secretary of Defense and the Military Departments effectively implemented the LFT&E process from the programs reviewed."

The National Academy of Sciences report (available in pre-publication form) identifies ways to improve efficiency in Operational Test and Evaluation with respect to individual tests and as part of the broader acquisition process. Their report gives detailed advice on the design of operational tests; on modeling and simulation; on testing software-intensive systems; and on testing reliability, availability, and maintainability. The panel urges the use of earlier tests with operational realism as a key to making testing in defense acquisition more efficient. More generally, they recommend that DoD design objective, continuous measurement and feedback into the acquisition system and archive data for analytical purposes and as the basis for feedback to support evaluation of each step in the acquisition process. In addition, they recommend that reports include explicitly an assessment of the uncertainty in test results. These recommendations will require some additional effort and resources to implement. I have allocated resources in FY98 to accomplish many of their recommendations.

RESOURCES

The RMA has both direct and indirect impact on the resources required by my office and the much larger resources required by the test ranges and programs.

Resources for the Office of the Director, OT&E

Beginning in FY99, my office has budgeted approximately an additional $2.5 million a year for increased capability in the three areas of missile defense, chemical and biological defense, and battlefield digitization. The increase, while small in terms of overall defense spending, is a large fraction of DOT&E operational test funding. These areas are either areas of increased emphasis as a result of the QDR or part of the Service response to Joint Vision 2010.

The methodology that goes with the RMA adds resource requirements in a second way: it adds new responsibilities and new types of "tests" and activities we must watch. Principally, this is because we are designing both the weapons system and redesigning their concepts of operation at the same time. There must be a strong interaction between the design and potential new operational employment options. Otherwise we will end up with systems optimized to perform in the way we used to do business, not the way we want to do business. Early insight into the operational challenges to new concepts and potential system design options can come from activities such as the Army's Advanced Warfighting Experiments, the ACTD program, and the application, as systems are being designed, of modeling and simulation. Fostering each of these requires resources.

Last year I sponsored a series of conferences on modeling and simulation beginning with the Army and Navy, at which the Service acquisition leadership and I spoke to their program managers and program executive officers. The first round of conferences, with the Army and Navy, is complete, and the Air Force conference is upcoming. I encouraged them to plan for, budget for, and use "best business practices" with respect to modeling and simulation. The early insight and advance warning that can be gained using modeling and simulation have yet to be fully realized in the Department. I believe it is necessary to continue to urge this effort and I plan to do so.

CINC Partnerships and Operational Field Assessments (OFAs)

In FY96, the Secretary of Defense asked me to improve dialog with the warfighting CINCs and provide timely support of CINC requirements. During 1996 we began several initiatives through which operational testers can more fully support the users--the CINCs. New technologies, concepts, and threats have raised questions that can best be answered by field assessments. The answers to these questions can immediately help improve our current operating capability and inform us on new systems and how best to test them. During the planning phase for executing the Secretary's tasking and initiating a field experiment program with the Unified Commands, several factors became apparent. First, the Unified Commands, DOT&E, and the Services do not have adequate resources to conduct joint field experiments. Second, conventional OT of unique systems does not account for the near-term hybrid technology and diverse threats of the Unified Commands. Third, the end of the Cold War created a transformation to experiments that has affected acquisition and warfighting operational concepts, i.e., diverse threats and technologies requiring new concepts and doctrine based on experiments. Fourth, coordinated national-level operational and intelligence support was critical to provide accurate, timely, and focused support when addressing joint interoperability and operational issues. Finally, the OFA concept was a promising warfighter support initiative with strong potential to support the Secretary's tasking.

The OFA partners (DOT&E, DIA, NSA, and NRO) provide coordinated national-level support of CINC OFA requirements within their respective mission areas. The underlying rationale was to give the JCS and CINCs the ability to explore operational concepts and address critical operational issues with responsive, well-coordinated partnership support.

The demonstration phase, completed in FY97, has been highly successful and exceeded expectations not only in the ability to find solutions to critical CINC operational issues, but also in terms of enlightened understanding of unique command operational and intelligence requirements. Participating Unified Commands included Atlantic Command (ACOM), Central Command (CENTCOM), European Command (EUCOM), Special Operations Command (SOCOM), Southern Command (SOUTHCOM), and Transportation Command (TRANSCOM). Additional requirements were submitted from Strategic Command (STRATCOM), Pacific Command (PACOM), and U.S. Forces Korea (USFK) but could not be accommodated due to lack of funding in FY97.

Last year, with congressional approval, I used approximately $3 million of our budgeted dollars for this activity. In FY98 the activity has, under congressional guidance, a $4 million budget.

An OFA is a quick response, low-cost experiment conducted by the OFA partners and the Unified Command to support warfighting CINC requirements. It is conducted in a realistic field environment focused on CINC concerns regarding joint operational concepts against the diverse threat estimates within a given area of interest. OFAs rely on a dynamic process providing coordinated operational and intelligence support to the warfighting CINCs by the OFA partners within their mission and functional areas of responsibility. With coordinated support, the OFA is an effective tool for rapidly answering specific operational questions. While the assessments are correctly focused to meet a critical CINC need, they also serve to enhance the realistic portrayal of the threat during operational tests and provide the added benefit of clarifying and coordinating intelligence questions. The answers to these questions enable a sharper focus of follow-on resource expenditures in the collection and evaluation of new or more comprehensive intelligence gathering. The focus of intelligence support is also enhanced as a result of increased understanding of CINC operational needs and capabilities.

OFAs should be viewed as an experiment to support emerging operational requirements. The rapid assessment process permits direct involvement of the CINCs and allows time to correct deficiencies and assess new operational concepts involving joint forces. This is substantiated by the National Defense Panel Report of December 1997 which states the following:

- The advantage of the experiment is that there are some things that can only be revealed in the field.

- Practical experimenting allows us to experience what may only be theorized at the discussion table.

- It is only through field experiments, primarily joint in nature, that we can adjust and iron out problems before they occur in actual combat.

- Although each Service may be interested in doing experiments to examine its own role in the future, the real leverage of future capabilities from experiments is in the joint venue.

In FY97, the Congress allocated $3 million for the initiation of a Live Fire Testing and Training Initiative (LFT&TI) for the purpose of exploiting the exchange of technology development initiatives and uses between the live fire testing and military training communities. This initiative is resulting in projects which are enhancing both communities in the areas of vulnerability of mobile weapon systems, enhanced visual fidelity of simulated targets, more realistic assessment of battlefield casualties, enhanced training and early testing of small arms, refinement of battle damage assessment and repair and the training to perform it, and shipboard safety and survivability assessment and training. These enhancements have joint Service applicability and offer opportunities for use by other federal agencies as well as state/local governments.

Management oversight of this initiative is provided by a Senior Advisory Group composed of the Deputy Director, DOT&E/LFT and the four senior military leaders of the DoD training, modeling and simulation commands located in Orlando, Florida. The LFT&TI program has been recognized by the Congress as a highly successful initiative that requires continued outyear funding support to provide sustainable technology infusion into both the live fire test and military training communities.

T&E Resources for the Future

The RMA adds urgency to the necessity to update our aging test infrastructure. For example, ballistic missile defense requires improvements in:

- BMD intercept lethality assessment methodology.

- A realistic 3km/sec live fire T&E capability.

- Kwajalein Missile Range upgrades.

- Debris tracking and modeling.

- A cooperative engagement capability, distributed theater-level testbed.

- Improvements in high-precision tracking.

- Fast, long-range targets.

In general, the infrastructure for testing is in need of modernization. Sixty-seven percent of the major range and test facility base is over 30 years old. Modernization funding, I believe, could come from savings earned by applying best business practices to testing in accordance with the Defense Reform Initiative. Current features of the testing infrastructure and community make that difficult today. For example, different accounting systems spread across the Services and ranges make even the elementary task of cost accounting and comparison next to impossible. In addition, there is a labyrinth of boards, councils, and committees that makes decisive action cumbersome. While many Service reductions in testing capability, people, and infrastructure have been made in recent years (and these will continue), there is no coherent plan to reinvest any of those savings in modernizing the infrastructure that is left.

Without upgrading our infrastructure, much of the RMA will remain a promise without proof.

OPERATIONAL TEST AGENCIES

The pressures on the Services' Operational Test Agencies (OTAs) reported last year continue and grow. As a way of sharing the insights each Service has gained in dealing with their challenges, I sponsored two gatherings of the commanders of the OTAs, the Service T&E Executives, and myself during 1997.

The Service OTAs are doing an outstanding job under severe budget pressures. At test ranges and test centers all across the country, testing has been cut across the board, and is continuing to be cut. The Service OTAs are no exception. They are doing very important work for which there is no substitute, and their workload is increasing. For example, the Navy's COMOPTEVFOR has over 400 programs requiring operational testing, more than at any time in its 51-year history.

The Army's OPTEC is supporting rapid acquisition programs as well as many other programs large and small. Further, in 1997, OPTEC was assigned new responsibility for live fire evaluation and new responsibility to consolidate evaluation for both DT and OT Army-wide. The Army has integrated developmental and operational testing within a single process. Under the Integrated Test and Evaluation (ITE) concept, data collected during demonstrations, experiments, contractor testing, modeling and simulation, and developmental testing can be used to reduce operational test and evaluation requirements. OPTEC's implementation of ITE provides the Army with a Single Evaluator involved in the early phases of a system's life cycle. OPTEC does this through the use of command-wide integrated product teams whose membership includes both developmental and operational testers and evaluators. OPTEC is an active participant in Advanced Warfighting Experiments (AWEs). AWEs insert experimentation, with OPTEC assessments, into operational training events. OPTEC participates in AWEs as a member of the AWE Integrated Product Development Team. OPTEC supports the writing of AWE experimentation plans and independent assessment reports. OPTEC supports the Warfighting Rapid Acquisition Program (WRAP) by providing expertise and conducting assessments. The use of the independent assessments supports the WRAP candidate selection process. OPTEC provides guidance and planning for collection and evaluation of data to support WRAP ASARC decisions. In FY97, OPTEC assessed 23 WRAP candidates. Six assessments were conducted during the Division XXI AWE. While I strongly support all these new responsibilities for OPTEC, it did not receive adequate resources for them and has since been cut even further, with still further cuts pending.

AFOTEC has new responsibilities for testing in streamlined acquisition, in support of the Air Force Battle Labs, in compartmented programs and support to the Unified Commands, and as the lead OTA on certain DoD-wide programs. In contrast to these new responsibilities, AFOTEC's budget has been notably reduced in FY98, and current projections are for similar reductions in the future.

There are several other new responsibilities that are adding to the workload and budget requirements at the OTAs. For example, in accordance with SECDEF direction, all the OTAs are now supporting ACTDs to bring operational test insights to ACTDs as early as possible. This is helping ACTD programs to understand and address the operational challenges they face much earlier. In addition, the SECDEF principles of early involvement, combining testing where possible (e.g., DT with OT), doing testing with training exercises (to save money and increase operational realism), and making better use of modeling and simulation, all require new effort from the OTAs. Operational assessments done early in a program can save millions of dollars, and all the OTAs are doing such assessments. However, they are being asked to take on these responsibilities without adversely impacting their regular work, without new resources, and even after their budgets are cut.

A new challenge for the operational test community is developing appropriate test programs under Acquisition Reform. For example, we can and should take advantage of contractor testing performed on commercial off-the-shelf (COTS) and nondevelopmental items (NDI), if that testing is realistically stressing. However, some COTS programs may not experience adequate operational or live fire testing solely at the contractor level. The C-130J and the T-3 are examples. My policy, and that of the OTAs, is that military systems must be tested in accordance with how they are intended to be used, not with how they are bought. Systems used by our troops when they are in harm's way must be tested in realistic combat conditions so that military commanders and users can know what to expect. Operational tests establish both the capabilities and limitations of military systems in operational conditions. Of course it is also important to know whether a particular detailed performance parameter or threshold has been met or not met, and this should happen in contractor testing as well. But to a soldier using equipment in combat, knowing its operational capabilities and limitations is at least as important as whether a particular technical specification has been met.

The work of the Service OTAs is certainly one of the best bargains, dollar for dollar, anywhere in the Department of Defense. Through the operational test and evaluations conducted by the OTAs, acquisition programs and warfighters know exactly what works, what doesn't, and why. The OTAs illuminate both the strengths and weaknesses of new military systems and help soldiers, sailors, airmen, and marines understand the characteristics and limitations of military equipment for operational use.

The Service OTAs are all very well respected and valued for their contributions. To continue their work, the OTAs need autonomy, stability, and independence. Stability is easily threatened by continuing funding cuts. To do their mission well requires high-quality professionals. Attracting and retaining high-quality professionals requires the OTAs to be stable, secure, and well supported at the highest levels in the Military Services.

This office initiated and led a significant effort with the Army, Navy and Air Force T&E Executives to draft legislation to improve the existent Live Fire Test Legislation, title 10, Section 2366, and reached consensus with them late in the year. This draft legislative package has been forwarded to the Department's General Counsel for further coordination. It has a number of benefits including the clarification of several terms, as well as addressing the live fire testing of COTS and NDIs to be used by our military forces.

MAJOR REPORTS

We completed four formal reports on the operational test and evaluation of weapons systems and submitted them to Congress. These reports (JAVELIN, JTIDS, EPLRS, and E-3 AWACS RSIP) are bound separately and are available on request as a classified annex to this annual report.

This annual report responds to statutory requirements. No waivers were granted to subsection 2399 (e)(1) of title 10 United States Code, pursuant to subsection 2399 (e)(2). Members of my staff and I will be pleased to provide additional information as appropriate.

Philip E. Coyle

Director
