DIRECTOR'S INTRODUCTION

In my first Annual Report (1994), I identified what I believed, and still believe, to be the primary mission of operational testing. Operational Test and Evaluation (OT&E) must help ensure that when our soldiers, sailors, airmen, and marines must go into harm's way, they take with them weapons that work.

During the six years I have served as Director, Operational Test and Evaluation (DOT&E), the responsibilities and functions of the organization have grown significantly. In 1994, the Congress added Live Fire Test and Evaluation (LFT&E) to the DOT&E portfolio of responsibilities. The integration of the LFT&E Program into our mission has enabled us to examine and report weapons effectiveness, suitability, and survivability together. LFT&E is primarily driven by the physics of the failure mechanisms, while OT&E is heavily driven by the battle environment, and the tactics and doctrine practiced. Evaluating these factors together provides a more complete picture of overall effectiveness.

In June 1999, the Secretary of Defense approved a reorganization of Test and Evaluation (T&E) within the Office of the Secretary of Defense (OSD). This reorganization vested DOT&E with responsibility for the T&E infrastructure including stewardship of the Major Range and Test Facility Base (MRTFB) and management of the Central Test and Evaluation Investment Program. These new responsibilities have helped to streamline defense acquisition with contributions from test personnel earlier in the life of an acquisition program. The reorganization also helps ensure that weapons systems are realistically and adequately tested and support complete and accurate evaluations of operational effectiveness, suitability, and survivability/lethality to the Secretary of Defense, other decision makers in the Department of Defense, and Congress.

THE STATE OF TESTING IN THE DEPARTMENT OF DEFENSE

During my tenure as the Director, Operational Test and Evaluation, I have expressed concern over the growing gap between T&E requirements and T&E resources. While T&E requirements and the complexity of weapon systems under test have increased, the resources for test and evaluation have declined dramatically. The greatest challenge has been to absorb the significant T&E personnel and funding reductions associated with defense downsizing while continuing to accomplish the T&E mission. Some of these reductions were accommodated through business process reengineering and investments that promoted efficiency. For example, the Kwajalein Modernization and Remoting Project, scheduled for completion in FY03, will enable Kwajalein Missile Range to operate with the 20 percent reduction in staff and $17 million a year reduction in annual operating costs that have already been taken from their budget. Unfortunately, many other reductions resulted in less testing being done to support acquisition programs and delays in needed upgrades and repairs at test facilities. The impact of reductions can be seen in the doubling of Army systems that failed to meet reliability requirements in Operational Testing (OT) between FY96-FY00 compared to FY85-FY90. The impact of increasing test facility equipment failures can be seen at the Arnold Engineering Development Center in Tennessee, where failures in plant heater, transformer and motor equipment, and a propulsion wind tunnel starting motor resulted in unexpected repair costs of $4.6 million during FY00 and delays of test programs from one fiscal year to the next.

Pressures from tight budgets and schedules have caused acquisition program managers to cut back on developmental testing to save time and money. Yet, reductions in developmental testing only postpone the discovery of problems to operational testing when they are more expensive and difficult to address. Program managers control the content and execution of the developmental test program, as I believe they should. However, cost and schedule pressures are increasingly causing program managers to accept more risk and it is showing up as performance shortfalls in operational testing.

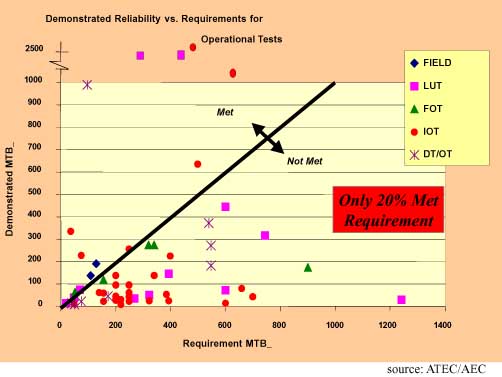

There has been a disturbing trend of programs entering dedicated OT&E without having completed sufficient operationally relevant developmental test and evaluation. In recent years, 66 percent of Air Force programs have had to stop operational testing because the system under test was not ready for operational test due to some major system or safety shortcoming. Since 1996, approximately 80 percent of Army systems tested failed to achieve even half of their reliability requirements during operational testing.

Figure 1. Army Operational Test - Demonstrated Reliability Versus Requirements

The Marine Corps V-22 Osprey program reduced developmental testing due to cost and schedule pressures. The original Flight Control System Developmental and Flying Qualities Demonstration Test Plan called for 103 test conditions to be flown. In an effort to recover cost and schedule, the conditions to be tested were reduced to 49, focusing on aft center of gravity conditions thought to be most critical. Of the 49 conditions, 33 were flight-tested.

I am also concerned about the Navy's use of waivers. In the case of the V-22 Osprey, developmental testing showed that the V-22 had failed to meet established thresholds for overall mean time between failures and for false alarm rate of the Built-In Test system. The Navy waived these criteria. In addition, the Navy approved waivers for test requirements identified in the Test and Evaluation Master Plan. These included tests of operations in icing conditions and of air combat maneuvering. Such waivers are permitted under Navy acquisition instructions. DOT&E does not endorse this practice and does not view the Joint Operational Requirements Document requirements as waived for our evaluations. Waivers for significant performance and test parameters should be discouraged.

Each of the Services has been looking into these trends and has initiated steps to address them. DOT&E will continue to monitor these trends and work with the Services to make appropriate process changes.

THE GAP BETWEEN T&E WORKLOAD AND T&E RESOURCES CONTINUES TO GROW

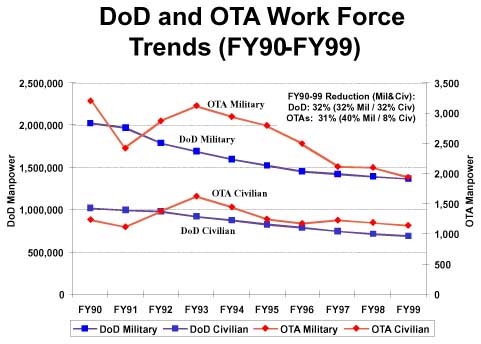

Major Range and Test Facility Base workload has remained robust for many years and is increasing at some MRTFB activities, yet funding for T&E infrastructure and investment has steadily declined. OT&E costs are a minuscule part of acquisition programs (typically less than 1 percent), and developmental testing is typically only a few percent, yet testing capabilities are under constant attack during the Department's budget processes. Operating and investment funding has been reduced approximately $1 billion a year compared to FY90 levels, a 30 percent reduction totaling $8 billion between FY90 and FY01. The Service test ranges do their best with old equipment and facilities to test the newest, most modern weapon systems. Compounding the problem is the loss of military and civilian personnel who perform test and evaluation. MRTFB manpower has been reduced 32 percent or approximately 14,000 people. Military manning levels have been reduced 45 percent, dramatically decreasing military participation in early T&E. Military manning levels at Army MRTFB activities have been reduced approximately 99 percent. In 1995, I began saying that we must reverse this trend, and I have worked hard to do so, with limited success.

The Service Operational Test Agencies (OTAs) are also under severe resource pressures. The challenges of personnel downsizing and budget cutting have diminished their strength and ability to do their mission. As the Director, Operational Test and Evaluation, I have worked hard to resist institutional actions by the Services that could adversely affect the strength, independence, or objectivity of the OTAs. The Service OTAs continue to be one of the best bargains, dollar for dollar, anywhere in the Department of Defense. Yet, the Service OTAs continue to struggle with the gap between OT&E requirements and resources.

Army Operational Test Command

Navy Operational Test and Evaluation Force (COMOPTEVFOR)

Marine Corps Operational Test and Evaluation Activity

Air Force Operational Test and Evaluation Center

In its 1999 report, the Defense Science Board (DSB) Task Force on Test and Evaluation recommended, "The focus of T&E should be on optimizing support to the acquisition process, not on minimizing (or even 'optimizing') T&E capacity." Despite the wisdom of this recommendation, there continues to be a lack of adequate T&E resources necessary to support the acquisition process. In FY01, the Army Test and Evaluation Command is facing a shortfall of $8.7 million for the conduct of approximately 39 Acquisition Category II-IV operational tests. The Air Force Operational Test and Evaluation Center is facing a potential funding shortfall for operational test requirements that could affect 20 of 49 test programs. The Marine Corps Operational Test and Evaluation Activity does not have adequate resources to support nuclear, chemical, and biological defense OT requirements. The Navy COMOPTEVFOR is dependent on acquisition programs to fund early participation by operational testers in acquisition programs when deficiencies are least costly to fix.

When the Service OTAs do not have adequate resources to accomplish their mission, acquisition programs are either delayed or forced to fund the shortfalls by diverting funds from other activities. In some cases, constrained operational test budgets force the Service OTAs to only focus on the highest profile programs, with small and medium-sized programs proceeding into production without formal evaluation and reporting by the OTAs. The House of Representatives Committee Report on the Defense Appropriations Bill for Fiscal Year 2001 accurately stated that operational testing, especially in the smaller programs, is not being adequately funded.

"The Committee is concerned that the Military Departments are not adequately budgeting for operational testing. The Committee understands that severely constrained operational test budgets are forcing the Services' operational test communities to focus reporting only on the highest profile programs with small and medium sized programs proceeding into production without formal reporting from the operational test community. The Committee believes that this situation must be corrected and fully expects the Military Departments to budget adequately to ensure all programs benefit from an appropriate level of independent operational testing."

House of Representatives Committee on Appropriations, Report 106-644, Department of Defense Appropriations Bill, 2001

Inadequate manning at OTAs and MRTFBs, including military manning, also has a detrimental impact on acquisition. For many years, I have pointed out that the loss of soldiers at Army MRTFB activities has hurt Army developmental testing and put Army acquisition programs at risk when they reach initial operational test and evaluation. I was surprised to learn that the FY01 Army Officer Distribution Plan would provide officers to the Army Test and Evaluation Command at even lower levels than in the past. I have worked with the Army to try to address this situation. However, the FY01 Army Officer Distribution Plan still provides only 65 percent of its authorized officers. I do not believe that this is adequate to ensure mission accomplishment. The problem is particularly critical when it comes to Majors and Captains, which are Test Officer positions in the Operational Test Command and the Evaluators in the Army Evaluation Command. I am also concerned about the Marine Corps Operational Test and Evaluation Activity's ability to adequately accomplish its mission with only 21 military and 12 civilian personnel.

T&E INVESTMENT IS NOT KEEPING PACE WITH WEAPON SYSTEMS TECHNOLOGY

I remain concerned over the low rate of investment in T&E capability. Since FY90, T&E investment levels have been reduced more than 28 percent while military construction funding for T&E facilities has decreased over 90 percent. Clearly, additional funding is necessary to maintain the aging capabilities and keep pace with advancing weapon systems technologies.

Weapon technologies are outdistancing our ability to adequately test systems as they are developed. My office has worked with the Deputy Under Secretary of Defense for Science and Technology (S&T) to establish an S&T program for T&E to accelerate the development of critical technologies for T&E. An applied research and advanced technology development program to exploit new technologies and processes to meet important T&E requirements, and expedite transition of these technologies from the laboratory to the T&E community, will ensure that technology is in place in time to support critical test events. This science and technology program for T&E will provide the essential knowledge base and lay the groundwork for building the T&E capabilities of the future.

The Air Force has conducted several excellent studies that point to the need for a designated range complex to provide testing for on-orbit systems to verify space system performance, prove system utility and expanded space systems concepts, inject space systems participation into training and exercises to enhance realism, and build warfighter confidence analogous to the air test ranges. Basic space test capabilities exist, but are not sufficient to meet space mission area testing requirements. Probable infrastructure needs include improved instrumentation; improved connectivity among ranges and range users; improved range scheduling and coordination capabilities; and more traditional test range processes, data collection, and safety control. The Air Force Space Command and Air Force Materiel Command are jointly developing a space test process and range concept. My office is working with these organizations and supports their efforts.

In response to a formal request from the Under Secretary of Defense (Acquisition, Technology and Logistics) (USD(AT&L)), my office assessed the status of resources to support interoperability testing and identified the need for a hardware-in-the-loop, system-of-systems level interoperability network. My office has initiated a proof of concept initiative, leveraging existing assets, to validate the feasibility and utility of the network concept before pursuing a major investment.

There is also a need for a national commitment to new transonic and hypersonic wind tunnel capability. Transonic wind tunnels have made major contributions to aircraft design and performance for the past 50 years and that role is expected to continue. U.S. test facilities that are used for military and commercial aircraft development are almost 50 years old. In the transonic region, our European allies have a new, efficient facility with high data throughput. A new U.S. high-Reynolds-number transonic wind tunnel, which produces data cheaply and efficiently, is essential. This will provide reliable performance data needed by both our military and commercial aircraft designers. Several DoD, NASA, Air Force, and industry studies on this issue over the past 15 years are in agreement as to the criticality of this need. Test facilities that can simulate the high-temperature, high-pressure hypersonic flight environments are also needed to support the development and fielding of air breathing hypersonic flight systems.

The requirements for some of these test needs are well documented, while for others it will take time to develop detailed requirements. But, it is critical that we continue this process to ensure that needed test capabilities and facilities are available to support future weapon systems concepts and integrated warfighting capabilities.

STRATEGIC PLANNING FOR T&E

Since assuming responsibility for OSD stewardship of the MRTFB in September 1999, my office has been working with the Services to address the immediate problems and to undertake a strategic review and planning process to address the long-term health of the T&E community. The T&E Executive Agent (EA), composed of the Service Vice Chiefs and myself, is working to institutionalize a strategic review of what it will take to bridge the gap between today's capabilities and tomorrow's technology. Our desire is for the Department to have the necessary T&E capabilities to thoroughly and realistically test and evaluate weapons and support systems for the warfighter. The EA has developed a series of goals that focus on the developmental and operational test work force, the decision makers, defense planners, infrastructure investments, policies, strategic partnerships, and test environments.

My office is also actively working with the training community to leverage instrumentation development efforts and reinvigorate strategic planning for instrumentation. As part of the reorganization of OSD T&E discussed above, I became chair of the Defense Test and Training Steering Group (DTTSG). The DTTSG brings together the test and training communities on common themes. Its mission is to oversee requirements, development, and integration of all training and test range instrumentation, and to facilitate the development of a consolidated acquisition policy for training and test capabilities, including embedded test and training capabilities in weapon systems. The Defense Test and Training Steering Group's current major activities are developing Sustainable Ranges Action Plans for the Senior Readiness Oversight Council and the development of a Joint Test and Training Roadmap.

THE SECRETARY'S THEMES

In May 1995, Secretary of Defense William J. Perry articulated five themes that have provided strategic direction for T&E during the last six years. These themes have been guides for change in the T&E process and have been regularly emphasized by Secretary William Cohen. They are early involvement, modeling and simulation, combining tests, testing and training, and Advanced Concept Technology Demonstrations.

Implementing these themes can save, and has saved, millions of dollars in major acquisition programs and has reduced program cycle times substantially. I believe this next section demonstrates that these themes have been effective in introducing needed change.

Theme 1: EARLY INVOLVEMENT

Operational testers should be involved from the outset when system requirements and contractor Requests for Proposals are being formulated. This early involvement contributes to earlier understanding of how systems will be used once they are fielded and identifies the tools and resources needed to achieve understanding. Proper early planning can also facilitate more efficient use of technical data from developmental testing in the operational test and evaluation process.

Early involvement of operational testers provides early feedback to help acquisition programs address operational issues. These issues are often missed in contractor and developmental testing. As the USD(AT&L) explained in a letter to the chairmen of the four Defense Committees, "I have advocated for many years that serious testing with a view toward operations should be started early in the life of a program. Early testing against operational requirements will provide earlier indications of military usefulness. It is also much less expensive to correct flaws in system design, both hardware and software, if they are identified early in a program. Performance-based acquisition programs reflect our emphasis on satisfying operational requirements vice system specifications."

The Under Secretary of Defense for Acquisition, Technology and Logistics has consistently supported this approach. For example, in his memo of December 23, 1999, Dr. Gansler recommended to the Service Acquisition Executives that they "seek out the involvement of the operational testers in reviewing the acquisition strategy and operational requirements for all ACAT II, III, and IV level programs as well as ACAT I." He also recommended that the acquisition strategy or operational requirements development not be considered complete until the Service OTA has coordinated on it.

Part of our approach to early involvement is to call for Early Operational Assessments (EOAs). Two of many noteworthy examples of the benefits of early insight can be found in EOAs for the LPD-17, conducted by the Navy's Operational Test and Evaluation Force in 1996, and NMD testing conducted by Army Test and Evaluation Command this year.

While early involvement requires only modest resources, these resources are not available. As budget and personnel pressures have increased on the Operational Test Agencies, their ability to provide early insights to acquisition programs has decreased.

A July 2000 GAO report on "BEST PRACTICES: A More Constructive Test Approach Is Key to Better Weapon System Outcomes" came to a similar conclusion but states it rather differently, namely, that we are testing too late.

"To lessen the dependence on testing late in development and to foster a more constructive relationship between program managers and testers, GAO recommends that the Secretary of Defense instruct acquisition managers to structure test plans around the attainment of increasing levels of product maturity, orchestrate the right mix of tools to validate these maturity levels, and build and resource acquisition strategies around this approach. GAO also recommends that validation of lower levels of product maturity not be deferred to the third level. Finally, GAO recommends that the Secretary require that weapon systems demonstrate a specified level of product maturity before major programmatic approvals."

GAO Report, July 2000

The implementation of earlier testing and evaluation is, of course, very much in the hands of the Acquisition program offices. Testers cannot make this happen alone. The money to do early testing is, for the most part, under the control of the Service Acquisition Executives, Program Executive Offices, and Program Managers, as it should be.

Theme 2: MODELING AND SIMULATION

Test and evaluation should make more effective use of Modeling and Simulation (M&S). Realistic and highly predictive models and simulations are needed that contribute to a real physical understanding of the system being modeled. The kind of M&S that truly contributes to reducing the scope and risks of testing is neither conceptually easy nor inexpensive.

In 1996, the Under Secretary of Defense (Acquisition & Technology), Dr. Paul Kaminski, directed that plans for M&S should become an integral part of every Test and Evaluation Master Plan. He said, "This means our underlying approach will be to model first, simulate, then test, and then iterate the test results back into the model." The reality is that our program management structure often does not make the investments necessary for M&S to be an effective contributor. In part, this is because the payoff from using modeling and simulation is often years down the road from the point of commitment of funds. Accordingly, the program manager can expect that he or she will be long gone before the benefits arise. This is also true in acquisition program support of the T&E infrastructure.

For this reason, a 1999 Defense Science Board Task Force on Test and Evaluation recommended that the acquisition process require and fund an M&S plan at the earliest practical point in a program. The DSB recommended that oversight and direction of M&S development and employment for T&E be carried out by an OSD T&E organization.

Since that DSB Task Force, my office sponsored a survey of M&S practices in acquisition programs. That survey indicated fewer than half of the programs had an M&S staff, fewer than half had a "collaborative environment" for M&S, and fewer than two-thirds had an M&S plan or mentioned M&S in the contract. The USD(AT&L) incorporated five recommendations from the survey results in the upcoming version of DoD 5000.2-R (Mandatory Procedures for Major Defense Acquisition Programs and Major Automated Information System Acquisition Programs). These recommendations are that:

If implemented, this should go a long way toward improving the situation.

Theme 3: COMBINING TESTS

A combined developmental/operational test period is now common in most test programs. Two-thirds of the programs under our oversight now use a combined developmental/operational test period as part of the overall test program. The effective combination depends on early efforts by the OTAs to make the developmental testing more realistic, complete, and operational. Progress here has been constrained by the resource pressures on the OTAs. Of course, there is still a need for a period of dedicated OT&E independent of developer and contractor.

This theme can be applied to interoperability testing as well. For example, it is possible to combine the operational tests of different systems, something that is necessary in testing the Department's system-of-systems concepts. Some progress has been made, for example, in combining the M1A2 System Enhancement Package and Bradley A3 operational tests.

Theme 4: TESTING AND TRAINING

Training exercises often provide the complex environment and the kind of stressing conditions needed for operational testing. Similarly, operational tests can add threat realism and rigorous data collection that can be valuable to training. Together, testing and training employ many of the same resources, often at the same range. Combining testing and training can yield big returns for relatively small investments of money and personnel. Many of the programs under our oversight now combine test and training events to gather operational test data.

Since systems are sometimes operationally deployed before OT, we have used experience from operational deployments as a complement to, or substitute for, some aspects of operational tests. An example is the Joint Surveillance Target Attack Radar System (JSTARS) and its Common Ground Station (CGS). JSTARS represented the first large-scale application of this approach, drawing on its deployment in Operation Joint Endeavor in Bosnia; the same approach was applied to the CGS during exercises in Korea this year.

Theme 5: ADVANCED CONCEPT TECHNOLOGY DEMONSTRATIONS

The fifth and final theme is the importance of using operational testing to support Advanced Concept Technology Demonstration (ACTD) programs. The challenge is to apply the techniques of operational testing in ways that support the ACTD process. To meet this challenge, testers will confront situations where the requirements definition process may be very informal and subject to change. Here again, the emphasis must be on providing understanding regarding an ACTD's contribution to military utility and being creative in defining ways to provide that insight. A critical element to success in ACTD testing is the establishment of effective working partnerships with the Commanders in Chief (CINCs). ACTD programs are typically sponsored by a CINC. Just as in the traditional acquisition programs, testing in the ACTD context must be aimed at providing early insight and understanding.

The Predator unmanned aerial vehicle provides an excellent example of bringing operational test insights into play in an ACTD. Here the OT&E community was involved early, used DT test data to help assess system capabilities, and participated in observing and then evaluating the system's experience during deployment in Bosnia.

I believe the "bottom line" cumulative effect of the five themes can be summarized in four words: "making it all count." Earlier involvement, better use of M&S, combined testing, innovative OT&E in the ACTD process, and combining of testing and training are all ways to maximize the contributions of the T&E community and "make them all count."

DOT&E RESOURCES

In FY00, DOT&E programs were funded at $217.722 million. The table below provides a breakout of the DOT&E appropriation. The $7.0 million congressional add for the Live Fire Testing and Training Initiative is included in the Live Fire Testing program. Congressional support for this initiative has been steadfast. The Central Test and Evaluation Investment Program funding includes the following congressionally directed programs: Roadway Simulator ($10 million), Airborne Separation Video System ($4 million), and the Magdalena Ridge Observatory Program ($3.5 million).

| Program Element | FY00 |
|---|---|
| Operational Test and Evaluation | $14.602M |
| Live Fire Testing | $16.669M |
| Test and Evaluation | $53.585M |
| Central Test and Evaluation Investment Program | $132.866M |
| Total | $217.722M |

LIVE FIRE

Fiscal Year 2000 saw the culmination of efforts initiated by DOT&E in July 1998. In 1998, DOT&E initiated an effort to clarify LFT&E policy within DoD. Together with Army, Navy, and Air Force Test and Evaluation executives, we drafted new regulations consistent with Live Fire Test legislation. In 2000, these changes were incorporated into DoD Regulations (DoD 5000.2-R). The changes: (1) clarify existing DOT&E policy requiring M&S predictions prior to Live Fire tests; (2) require evaluation of U.S. platform vulnerability to validated directed energy weapon threats; and (3) define LFT&E procedures and requirements for programs lacking a defined EMD or B-LRIP milestone.

My Live Fire Directorate has been very active in making the best use of modeling and simulation in T&E. The Live Fire Test program, perhaps more than any other testing activity, continues to add discipline to the exercise and evaluation of modeling and simulation in support of acquisition. Live Fire Test policy requires a model prediction to be made prior to every Live Fire Test. This policy has focused attention on test instrumentation, test issues, and shot sequencing, as well as model adequacy.

DOT&E was responsible for the oversight of 90 LFT&E acquisition programs during FY00.

NEW OPERATIONAL TEST AND EVALUATION POLICIES

On November 17, 1999, my office issued an Information Assurance Test and Evaluation Policy, which included guidelines on metrics for operational testing for information assurance. These guidelines are provided as a resource to assist testers and program managers in properly implementing the policy. In addition, we sponsored the first annual Information Assurance T&E Conference in Albuquerque, NM, from October 31 to November 2, 2000. This conference brought together a broad cross-section of personnel with both T&E and information assurance backgrounds from the Services, DoD, and other government agencies, as well as industry and academic representatives.

On October 25, 1999, my office issued policy on Operational Test and Evaluation of Electromagnetic Environmental Effects (E3). Our efforts in FY00 focused on working with the Service Operational Test Agencies and the Joint Spectrum Center (JSC) to develop the implementation plan for the policy. Coordination has taken place via monthly DoD E3 integrated planning team meetings. The JSC developed a draft guidance document on E3 assessment for program managers. DOT&E published a "how to" document in Program Manager magazine. In addition, DOT&E hosted the first workshop on E3/Spectrum Management testing in September 2000.

CINC PARTNERSHIPS

As noted earlier, the Secretary of Defense has asked the Director, Operational Test and Evaluation, to help provide greater support for the warfighting CINCs. During 1996, we began several initiatives. One of these initiatives is making good use of CINC exercises for operational tests of military equipment. As stated above, a second initiative with the CINCs is to bring operational test insights to ACTDs. The CINCs are the sponsors of ACTDs, and a close working relationship with them in the testing of ACTDs is essential. I consider both of these initiatives successes.

Secretary Cohen has said, "It is essential that new operational concepts be tested by a full range of joint and Service warfighting experiments to develop a new joint doctrine." As new operational concepts and technologies are proven, they will lead to changes in the organization, employment of forces, and doctrine. Operational testing is an important way in which new concepts are tested, and operational testing can contribute further in this arena. This was the recommendation of the Blue Ribbon Panel in 1972, and remains particularly applicable today as the military is transformed in accordance with the Defense Planning Guidance.

The Year 2000 (Y2K) challenge created an opportunity for DOT&E to provide direct T&E support to the Unified and Specified Commands. A Deputy Secretary of Defense memo dated August 24, 1998, directed DOT&E to provide technical assistance in support of JCS-directed Y2K activities. DOT&E provided the CINCs with highly qualified OT&E support to the Y2K assessment process. Following on the success of that initiative, DOT&E is providing direct on-site support to the CINCs at their request.

The 1999 Unified Command Plan (UCP-99) tasks the CINCs to conduct outcome-based interoperability assessments of fielded systems and to provide readiness implications of issues and deficiencies identified within their area of responsibility. In support of this, DOT&E provides on-site and reach-back OT&E support to advise the CINCs on how best to focus evaluation objectives; develop evaluation strategies; design test and evaluation plans/programs; evaluate operational performance; and identify and track corrective actions. This OT&E support helps relate operational issues, evaluation objectives, measures of effectiveness, and data collection requirements to operational requirements developed with quantifiable and testable metrics. This support is integrated with military exercises, operational assessments, Advanced Concept Technology Demonstrations, joint experiments, joint test and evaluations, and other mission-based operational performance evaluations to address readiness implications within the individual commands.

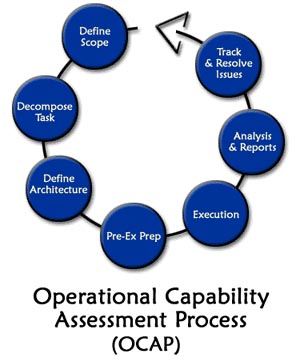

The Y2K effort became a model for our CINC partnerships. Since then, we have worked with the CINCs to institutionalize an Operational Capability Assessment Process to capture what is learned, track the solution, and confirm it in subsequent exercises. In conjunction with U.S. Joint Forces Command, DOT&E is sponsoring development of a set of software tools designed to further assist the warfighting CINCs in assessing outcome-based interoperability and the impact on mission-based operational capabilities. On-site support includes guiding development of these web-based tools to suit the specific needs of the individual CINCs. Battle Laboratories and Operational Test Agencies may use these same tools to help assess Advanced Technology Demonstrators and ACTDs for CINCs, as well as conduct early operational assessments for acquisition systems.

EXTERNAL REVIEWS BY THE DEFENSE SCIENCE BOARD

DOT&E regularly supports external reviews of our performance. In the fall of 1999, the Defense Science Board Task Force issued a very positive report with many helpful findings. These findings were summarized in our 1999 Annual Report.

In 2000, the Defense Science Board Task Force on Test and Evaluation Capabilities conducted a new review. Section 913 of the Fiscal Year 2000 Defense Authorization Act directed the Secretary of Defense to convene a panel of experts, under the auspices of the Defense Science Board, to conduct an analysis of the resources and capabilities of the Department of Defense, including those of the military departments. The study was to identify opportunities to achieve efficiencies, reduce duplication, and consolidate responsibilities in order to have a national T&E capability that meets the challenges of Joint Vision 2010 and beyond. The final report is in coordination at this time.

EXTERNAL REVIEW BY THE NATIONAL RESEARCH COUNCIL ON TESTING FOR RELIABILITY

The 1998 National Research Council study of Statistics, Testing and Defense Acquisition recommended that, "The Department of Defense and the military services should give increased attention to their reliability, availability, and maintainability data collection and analysis procedures because deficiencies continue to be responsible for many of the current field problems and concerns about military readiness." (Recommendation 7.1, Page 105)

In response to this recommendation and other observations on the state of current practice in DoD compared to industry best practices, the Department asked the National Academies to host a workshop on reliability. The first workshop was held June 9-10, 2000, at the National Academy in Washington. Speakers and participants were from industry, academia, and the Department of Defense. The suggestions from the workshop will be part of an effort to implement the recommendation to produce a "... new battery of military handbooks containing a modern treatment of all pertinent topics in the fields of reliability and life testing ..." (Recommendation 7.12, Page 126). DoD Handbook 5235.1-H, Test and Evaluation of System Reliability, Availability, and Maintainability: A Primer was last updated in 1982.

A second workshop on software-intensive systems will be held next year.

MAJOR REPORTS

In FY00 and to date in FY01, there have been 14 formal reports on the OT&E and LFT&E of weapons systems for submittal to Congress. These reports are bound separately and available on request as an annex to the classified version of this annual report.

This annual report responds to statutory requirements. No waivers were granted to subsection 2399 (e)(1), Title 10, United States Code, pursuant to subsection 2399 (e)(2). Members of my staff and I will be happy to provide additional information as appropriate.
